Quality Assurance Image Library

This is my carefully curated collection of Slack images, designed to perfectly capture those unique QA moments. Whether it's celebrating a successful test run, expressing the frustration of debugging, or simply adding humor to your team's chat, these images are here to help you communicate with personality and style.

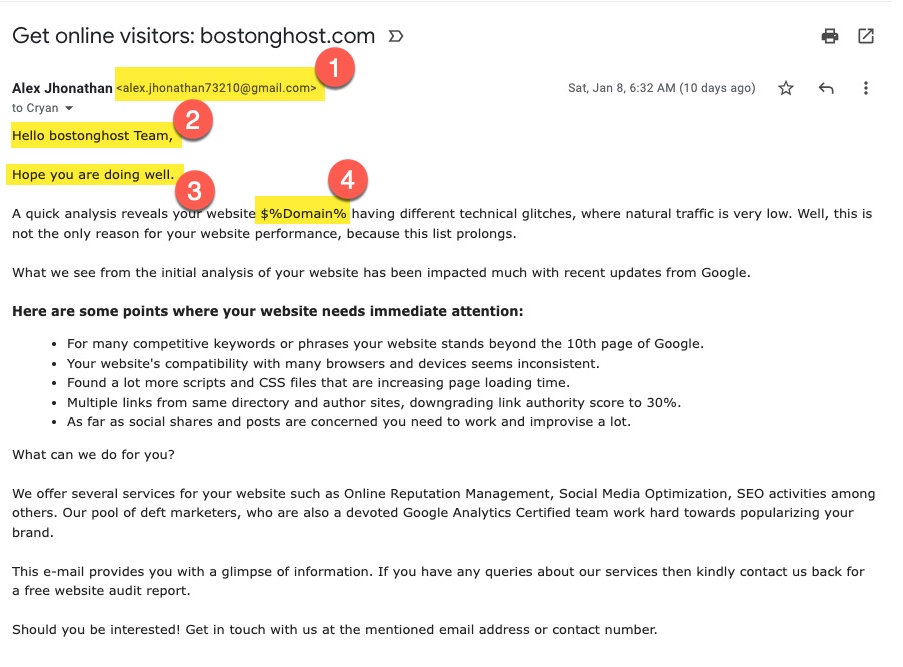

BostonGhost.com Email

A long time ago, I purchased the BostonGhost.com domain to use for testing. I never did anything with it, but still have the domain thanks to a hosting provider that I worked at.

Every once in a while I would get business inquiries about the domain. Usually, it's a sign of mass mailing, and the sender doesn't pay much attention to the potential client.

This is one such email that I recently got:

QA Fails the Email

Here are four reasons that QA fails this email:

- Why would I trust an email coming from gmail.com? Do they not have a legitimate business? Shouldn't the sender be using their domain name? If you are so good, wouldn't you have a domain name?

- Nice generic hello statement. You couldn't capitalize the domain name?

- "Hope Your Doing Well?" Like you really care how I am doing?

- Nice that you keep the variable placeholder in the email. That clearly shows that you pay attention to your emails. I certainly want to trust my Social Media Optimization and SEO to your company.

We All Make Mistakes

I didn't call out the name of this company. We all make mistakes.

You should always test your email campaign before sending it out. At least have a second set of eyes to offer some constructive feedback.

Want to know why your conversion rate is low? You're not testing.
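A pre-send check can catch some of the problems listed above automatically. Here is a minimal sketch in Python, assuming a few common placeholder styles and a short list of free mail domains. The function name `lint_email`, the patterns, and the sample message are all hypothetical; the actual email's placeholder format isn't shown here, so adjust the patterns to whatever your mail-merge tool uses.

```python
import re

# Hypothetical placeholder styles (assumptions, not taken from the email above).
PLACEHOLDER_PATTERNS = [
    re.compile(r"\{\{\s*\w+\s*\}\}"),    # {{first_name}}
    re.compile(r"\[[A-Z][A-Z_ ]*\]"),    # [DOMAIN NAME]
    re.compile(r"%[A-Z_]+%"),            # %FIRSTNAME%
]

# Assumed list of free mail providers; extend as needed.
FREE_MAIL_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com", "hotmail.com"}


def lint_email(sender: str, body: str) -> list[str]:
    """Return a list of human-readable problems found in a draft email."""
    problems = []

    # Flag senders that are not using their own business domain.
    domain = sender.rsplit("@", 1)[-1].lower()
    if domain in FREE_MAIL_DOMAINS:
        problems.append(f"sender uses a free mail domain: {domain}")

    # Flag any template variable that was never filled in.
    for pattern in PLACEHOLDER_PATTERNS:
        for match in pattern.finditer(body):
            problems.append(f"unfilled placeholder: {match.group(0)}")

    return problems


if __name__ == "__main__":
    draft = "Hello bostonghost.com team, we help {{company}} rank higher."
    for problem in lint_email("seo.expert@gmail.com", draft):
        print("FAIL:", problem)
```

A script like this doesn't replace a second set of eyes, but it is cheap to run against every draft before a campaign goes out.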

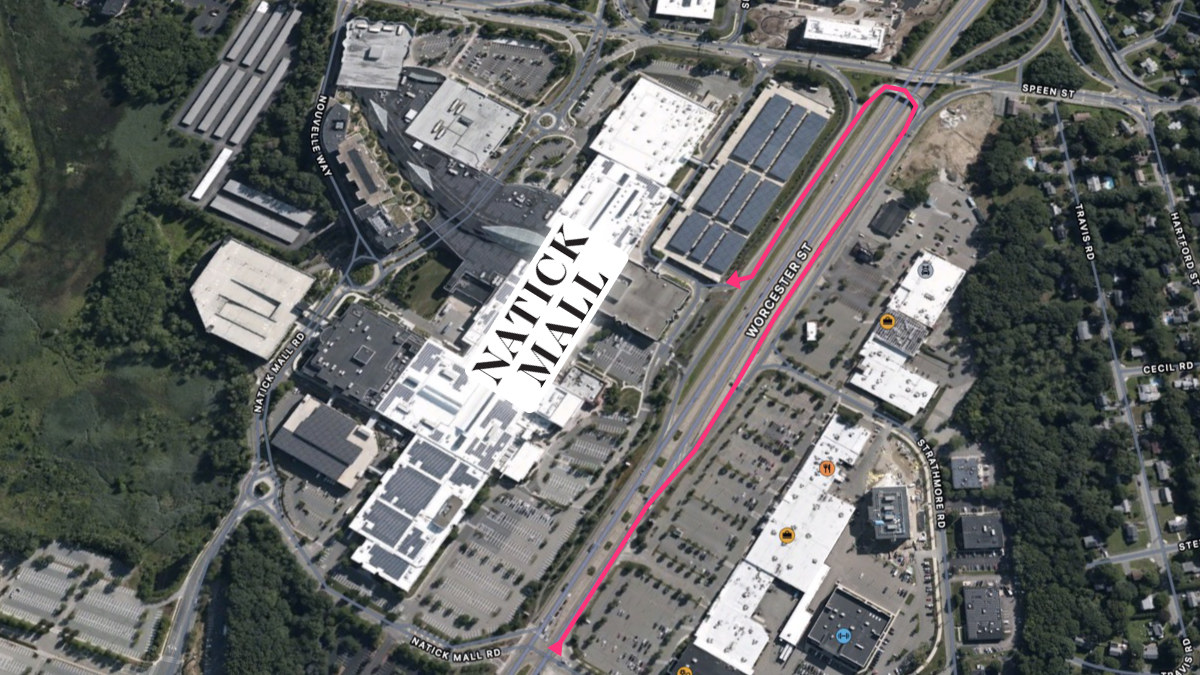

QA Fail: Natick Mall Exit Sign

Natick Mall is one of the busiest malls in Massachusetts. You can find all sorts of interesting stores and places to eat.

If you drive eastbound on Route 9 heading to the Natick Mall, it can be a bit tricky since there's no direct exit to the mall.

You can't take a left at a light to go to the Mall.

When you get to the Mall, you may see this sign:

QA Fails this sign

Not sure who this sign is for - it's pretty small and easily missed with all the other distractions.

The sign is incorrect. The next "exit" is a traffic light, and taking a left will put you in Sherwood Plaza, across the street from the Natick Mall.

You need to be in the right lane to get to the Natick Mall which is on the left side of the road. You can't turn left at the lights - where you would think you would. The sign is too close to the light - so a car in the left lane would have to cross a couple of lanes to take the proper exit.

Oh, if you miss your exit? It's a 1-mile penalty to do a U-Turn back to the Mall.

Map to Show The Way

Here's a map to show the proper way to get to the Natick Mall parking lot:

Basically, you have to drive by Sherwood Plaza, take the Speen St. exit, and then take the 9 West Framingham route. You'll end up making a U-turn and positioning yourself for several parking areas at the Natick Mall.

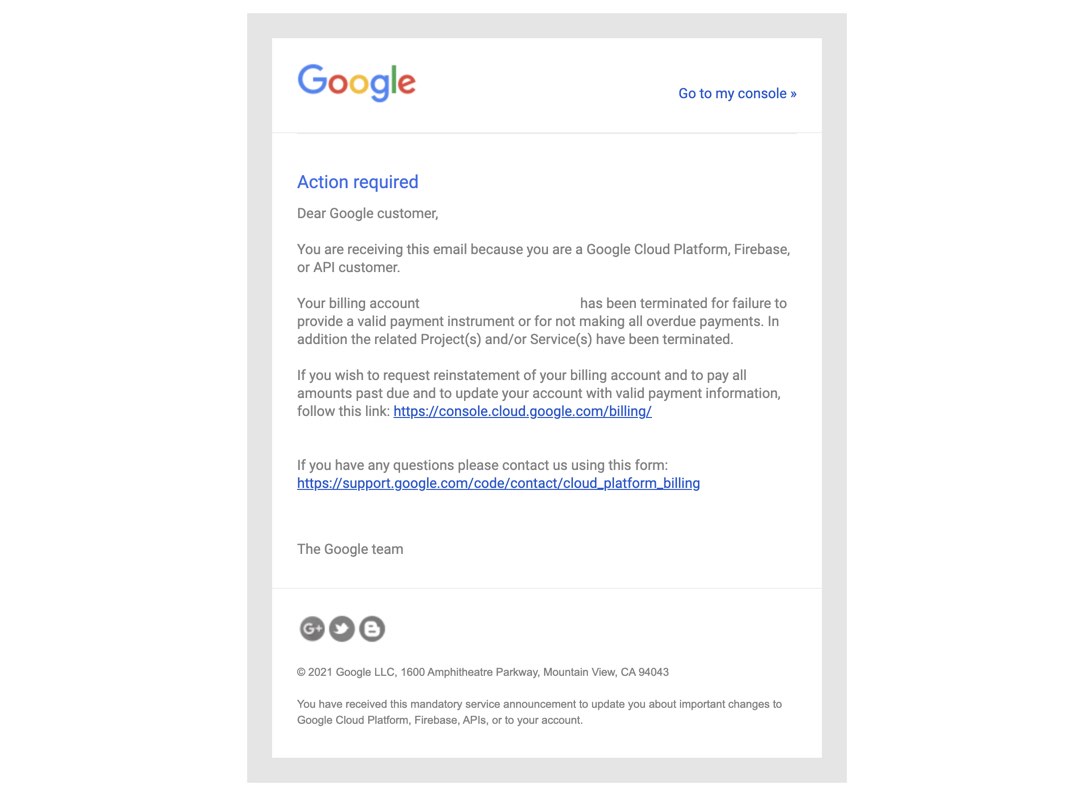

Google Cloud Termination Email

Recently I was testing Google Cloud to see how its features compare to those of Amazon Web Services. This was part of a self-training program that I did for work.

I created a personal account so that I had more flexibility.

I only used Google Cloud during the trial period and was not required to put in a card for the trial - which was good because I really didn’t have any intentions to make a purchase.

A couple of weeks later, I got this email:

Text of the email:

Action required

Dear Google customer,

You are receiving this email because you are a Google Cloud Platform, Firebase, or API customer.

Your billing account XXXXX has been terminated for failure to provide a valid payment instrument or for not making all overdue payments. In addition the related Project(s) and/or Service(s) have been terminated.

If you wish to request reinstatement of your billing account and to pay all amounts past due and to update your account with valid payment information, follow this link: https://console.cloud.google.com/billing

If you have any questions please contact us using this form: https://support.google.com/code/contact/cloud_platform_billing

Why QA Fails

This email sounds very threatening and somewhat demanding. I had no files in the Google Cloud.

Why mention "pay all amounts past due" when there wasn't anything to pay back? I was using it during the allowed trial period.

Once someone is done with the trial, or if they feel that it isn’t for them, there should be a way to cancel.

I never got a "Termination" email from Amazon Web Services. My account was just moved to the AWS Free Tier.

QA Memes that Sum up 2021

These are the six QA memes that pretty much sum up 2021.

Romantic Candlelight Dinner…

http://www.cryan.com/qa/graphics//ProductionBug.jpg

Working from home means that you’re always on the clock. There’s nothing like getting a critical text message or phone call about an issue in Production.

Added Logging

http://www.cryan.com/qa/graphics//log4jMeme.png

December was looking quiet until the log4j issue blew up. Who knew that having logging could cause some vulnerabilities? Logging in Production used to be good, not anymore.

Using Jira doesn't make you agile

http://www.cryan.com/qa/graphics//JiraAgile.jpg

Where I work, Agile sprints are sometimes confused with Waterfall sprints. You can be productive using Agile; just make sure to stick with the program.

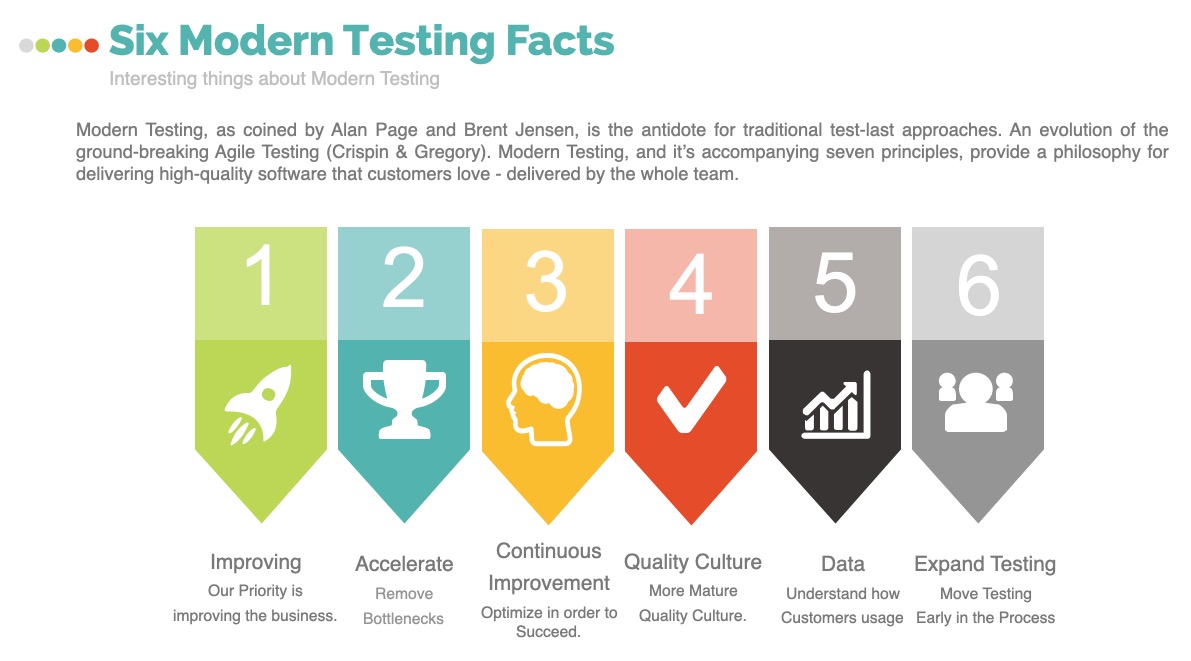

Modern Testing Meme

http://www.cryan.com/qa/graphics//ModernTestingGraphic.jpg

This year I learned a lot about Modern Testing and how it can help improve the sprint velocity of any team. Modern Testing moves the ownership of testing to Developers, while QA focuses on Quality.

Stand Back "Back to the Future" - Testing in Production

http://www.cryan.com/qa/graphics//meme-stand-back-test-in-production.jpg

Lots of testing in Production in 2021 - at least on various projects that I worked on this year. There’s so much risk in testing code in Production - but sometimes you don’t have that choice.

Going to QA

http://www.cryan.com/qa/graphics//GoingToQA.jpg

In 2021, getting code to QA was a big deal. Developers seemed confident that if it passed code review, it would have smooth sailing in QA. That wasn't always the case, as QA tends to test functionality more than logic.

Send it to QA, what could possibly go wrong?

QA Meme Additions

Here’s this month’s Meme collection for Quality Assurance testing. Enjoy!

Make sure to check out the full QA Memes collection in the QA Graphic Library.

Yes, You Are Correct

http://www.cryan.com/qa/graphics/YesCorrect.jpg

Bernie Sanders asking for AWS to be back up

http://www.cryan.com/qa/graphics/BernieSandersAWS.jpg

Release Moratorium

http://www.cryan.com/qa/graphics/ReleaseMoratorium.jpg

The Scroll of the Truth

http://www.cryan.com/qa/graphics/ScrollofTruth.jpg

LogJam in Early 2021

http://www.cryan.com/qa/graphics/ChangeMyMindLogJam.png

Homer is Happy Dev is Ready

http://www.cryan.com/qa/graphics/WooHooDevisReady2021.jpg

QA Memetober #4

Here's the final entry for QA Memetober. There are four more included in the QA Graphic Library.

Happy Release Day

http://www.cryan.com/qa/graphics/2021/HappyReleaseDay.jpg

Moments before a Release

http://www.cryan.com/qa/graphics/2021/MomentsBeforeRelease.jpg

Bug Free Build

http://www.cryan.com/qa/graphics/2021/BugFreeBuild.jpg

QA Test Cycle

http://www.cryan.com/qa/graphics/2021/QACycle.jpg

Remember to check out all the QA Memes in the QA Graphic Library. (Quite Possibly the Largest QA MEME collection online!)

QA Memetober #3

Here are some Meme type images that I created for this week's edition of Memetober.

You're Still Here?

What is the Expected Result?


Tested Using Google Incognito Mode

Testing Code Meme

This week's "Memetober" theme is "Testing code when it gets to QA" and dealing with the "Test Case Repository."

QA Memetober

On Tuesdays in October, I'll post 2 QA Memes. These will be practical memes that I use on a frequent basis. The goal is to focus on quality content, and not just throw up a bunch of graphics.

Good Luck with Your Testing

Ready for QA

September QA Memes

Here's the traditional addition to the QA Graphic Library.

https://www.cryan.com/qa/graphics/september2021/CodeFreezeTime.jpg

https://www.cryan.com/qa/graphics/september2021/QAHomerSimpson.jpg

https://www.cryan.com/qa/graphics/september2021/ReleaseDayTomorrow.jpg

https://www.cryan.com/qa/graphics/september2021/TimeForTesting.jpg

Be sure to check out the library for additional graphics added this month.

About

Welcome to QA!

The purpose of these blog posts is to provide comprehensive insights into Software Quality Assurance testing, addressing everything you ever wanted to know but were afraid to ask.

These posts will cover topics such as the fundamentals of Software Quality Assurance testing, creating test plans, designing test cases, and developing automated tests. Additionally, they will explore best practices for testing and offer tips and tricks to make the process more efficient and effective.

Check out all the Blog Posts.

Blog Schedule

| Day | Topic |
| --- | --- |
| Tuesday | QA |
| Wednesday | Veed |
| Thursday | Business |
| Friday | Macintosh |
| Saturday | Internet Tools |
| Sunday | Open Topic |
| Monday | Media Monday |

Other Posts

- QA Memetober

- Browser Calories

- Finding the Right Balance

- Early Evaluation

- QA Memes

- QA Interview Questions

- Google Testing Blog

- Importance of "Cowboy Up" Mentality

- Engineering Liability of Code

- QA Halloween Testing

- QA Info Bar Bookmarklet

- All Quality is Contextual

- Four Tips for Writing Quality Test Cases for Manual Testing

- Stealth Mode Deployment vs Release Canaries

- Test Description